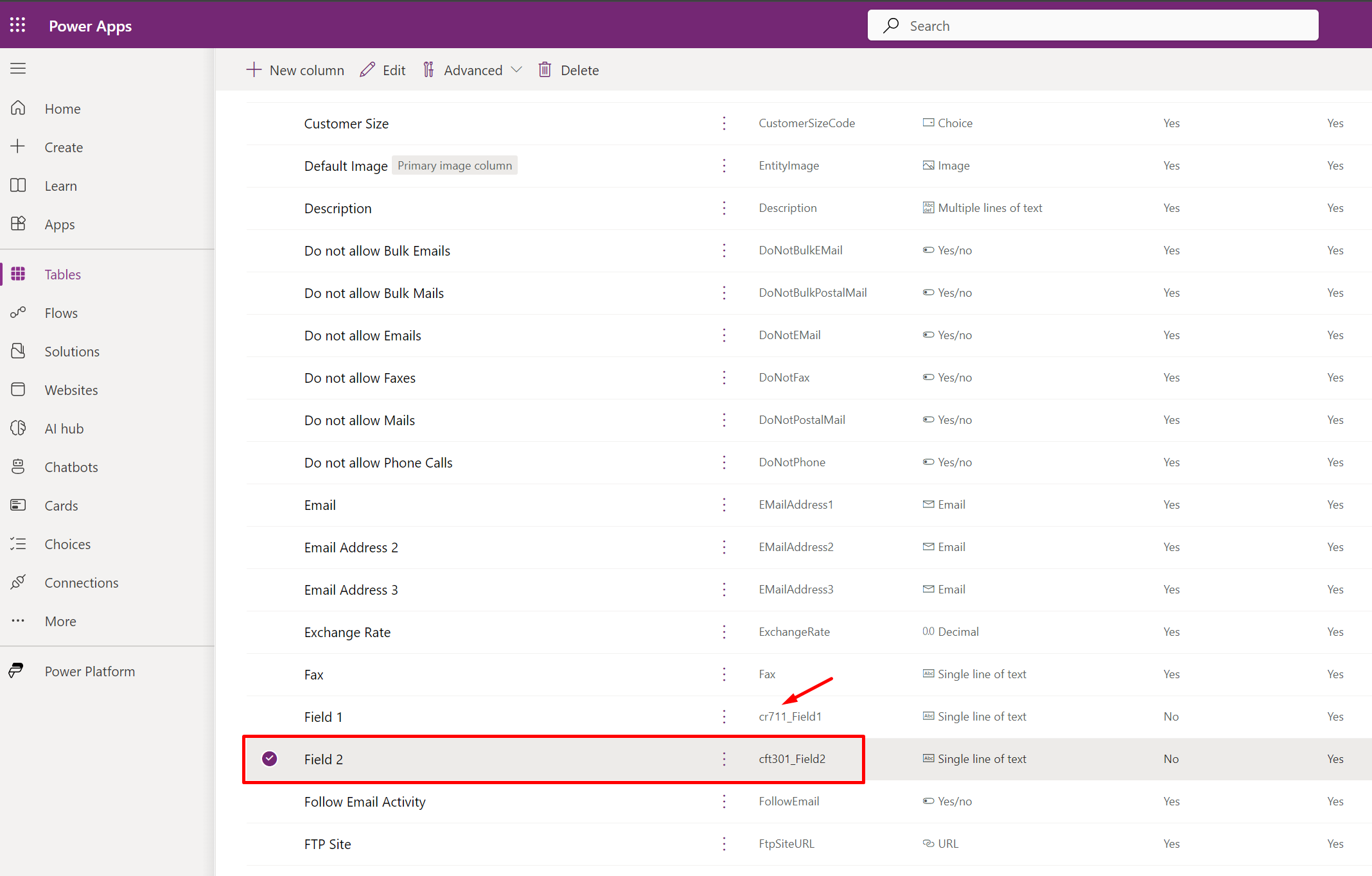

If you are used to updating entity icons in the classic UI, here’s how to set the SVG icon of a custom entity you just created, using the new Power Apps Maker portal.

Let’s say below is your custom entity. It comes with a default icon, which you want to replace with a custom SVG icon.

Adding SVG Icon to Custom Entity

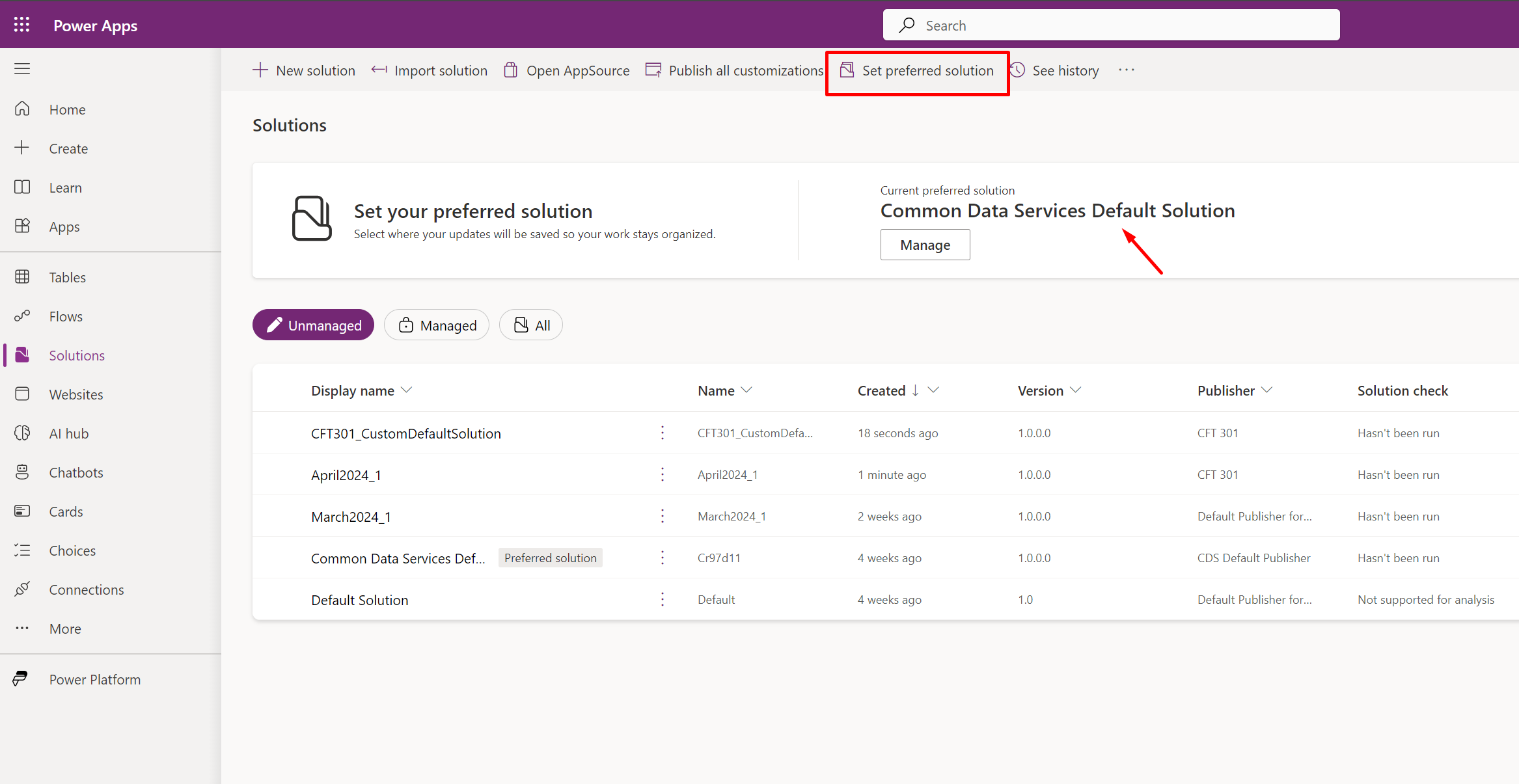

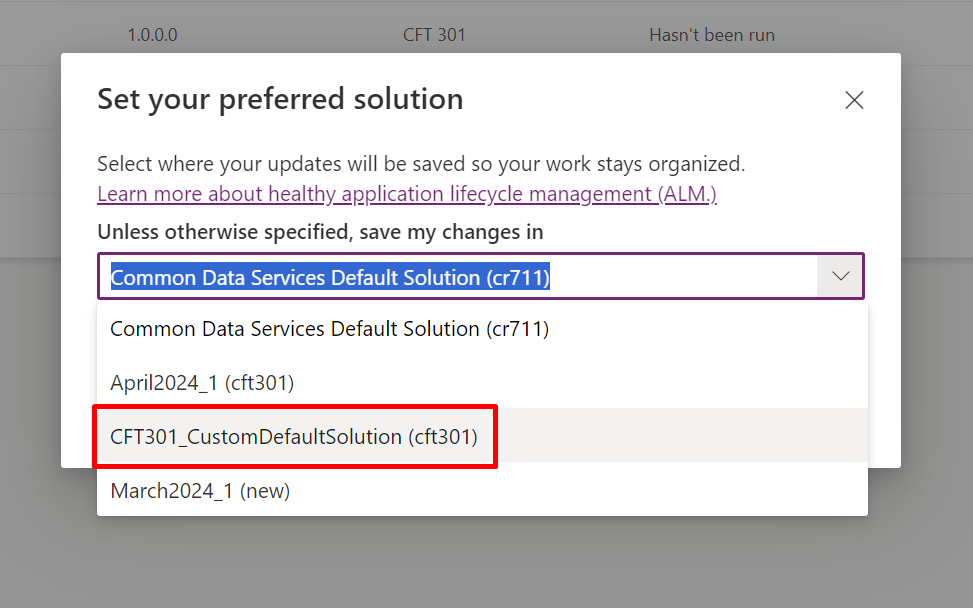

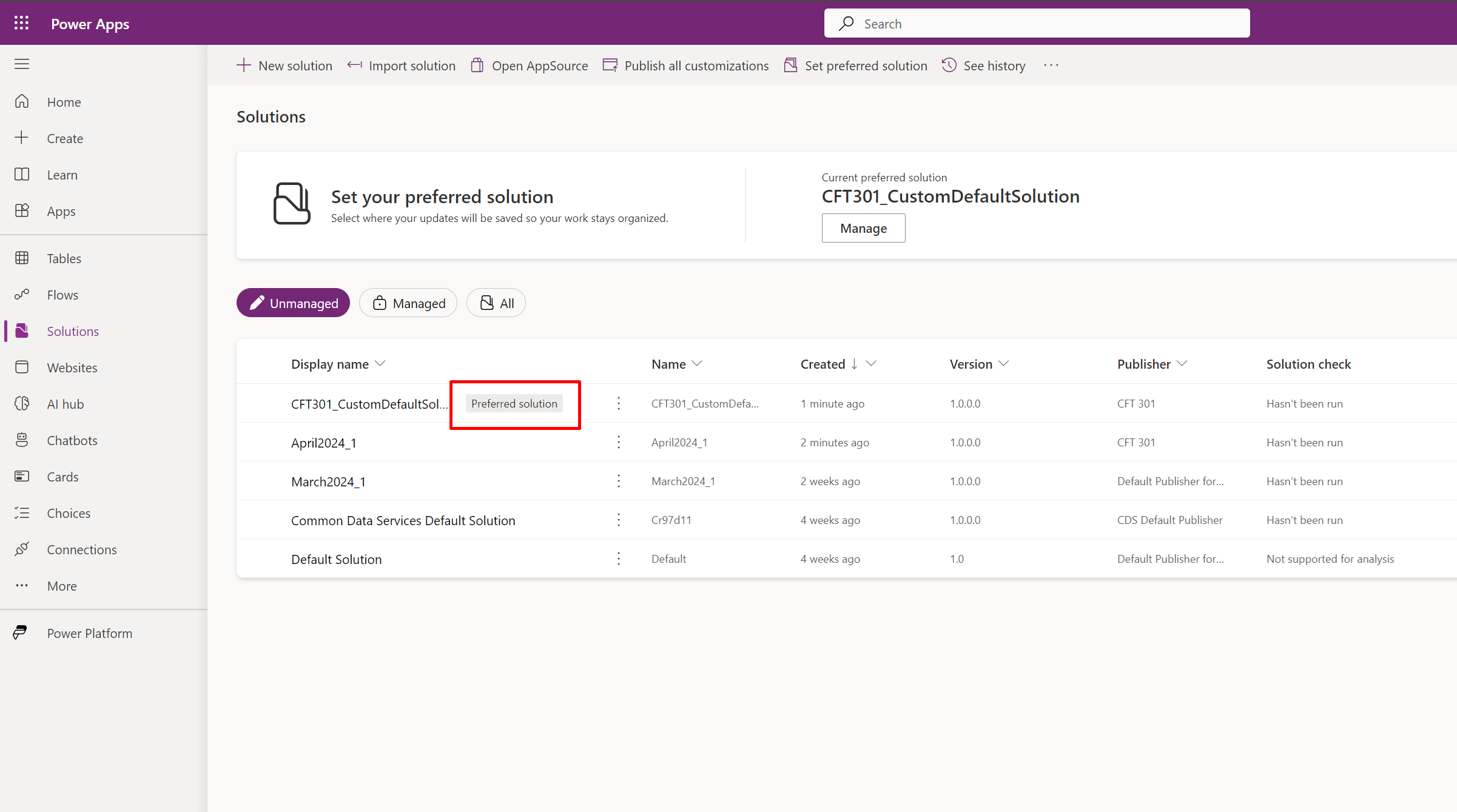

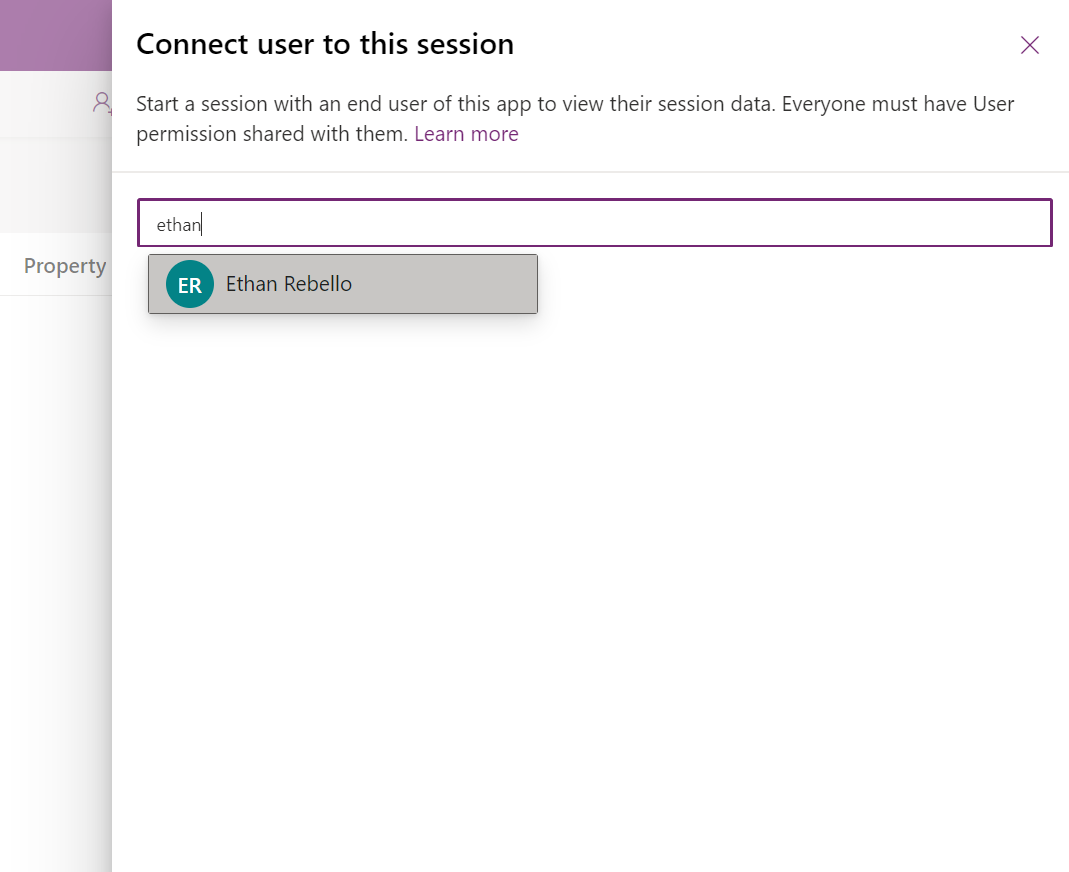

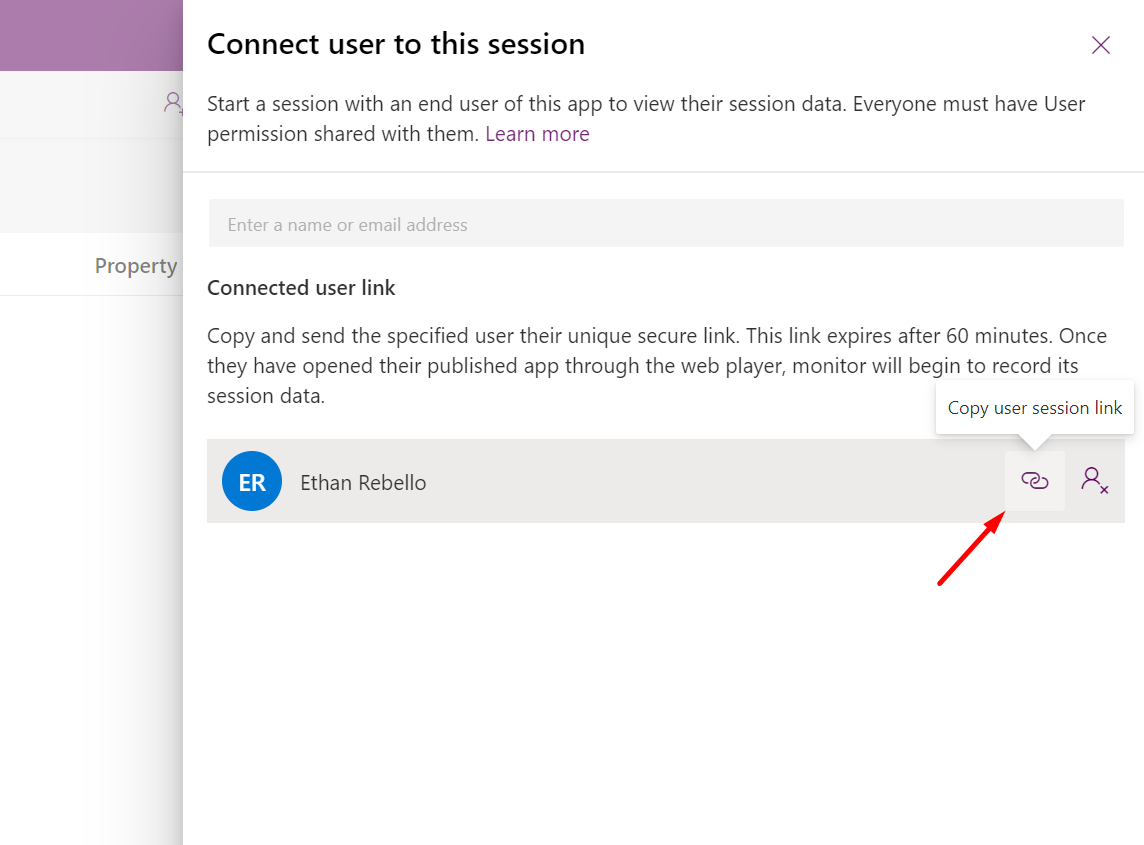

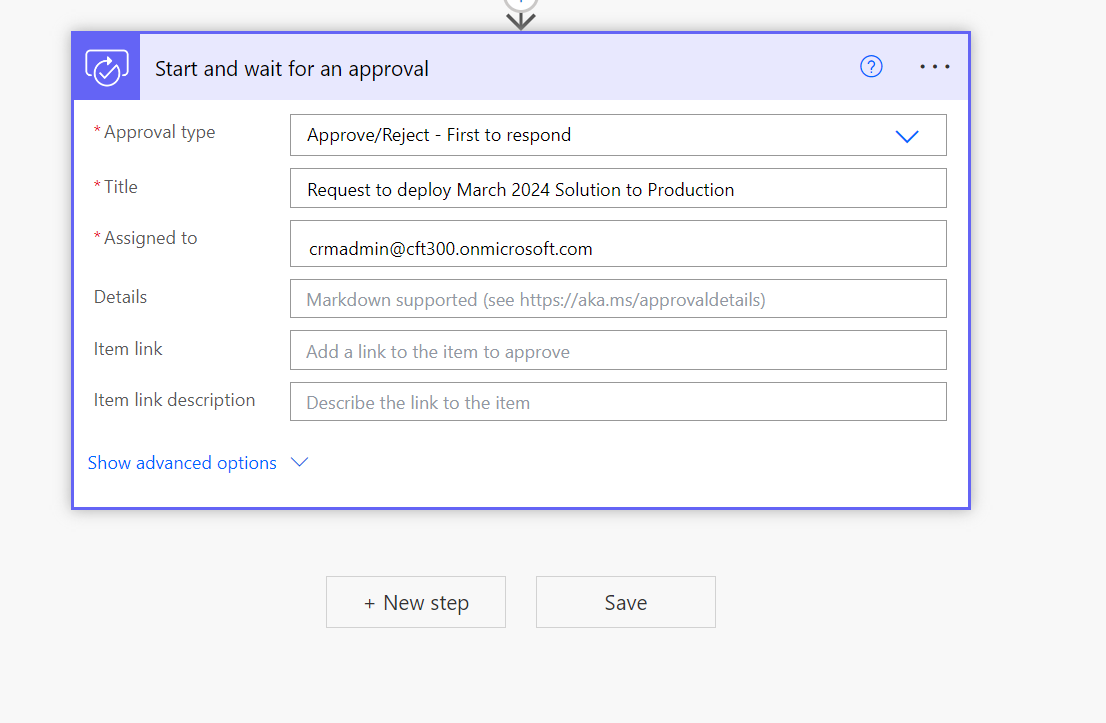

Assuming you have the appropriate access to customize the system, follow the steps below –

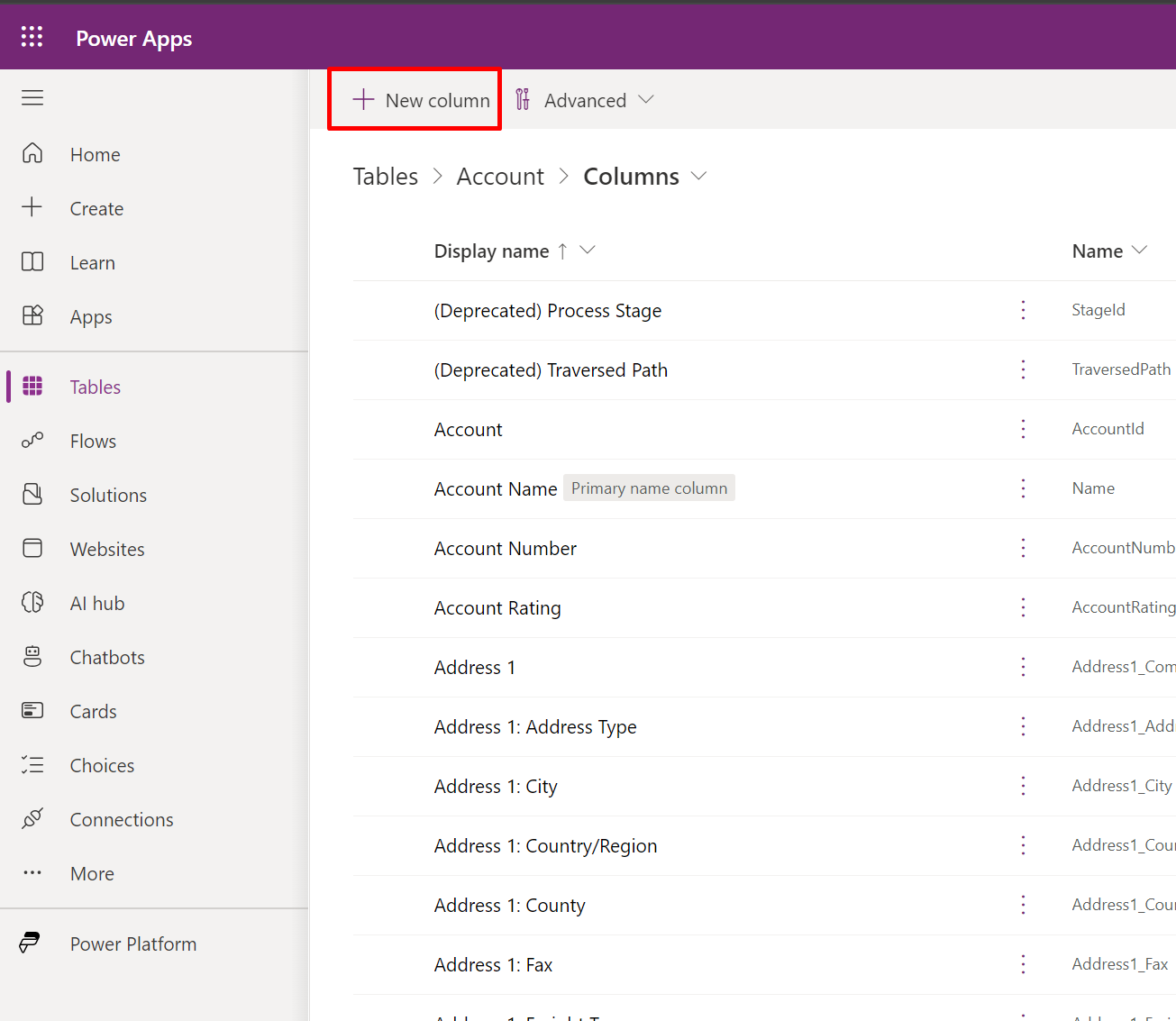

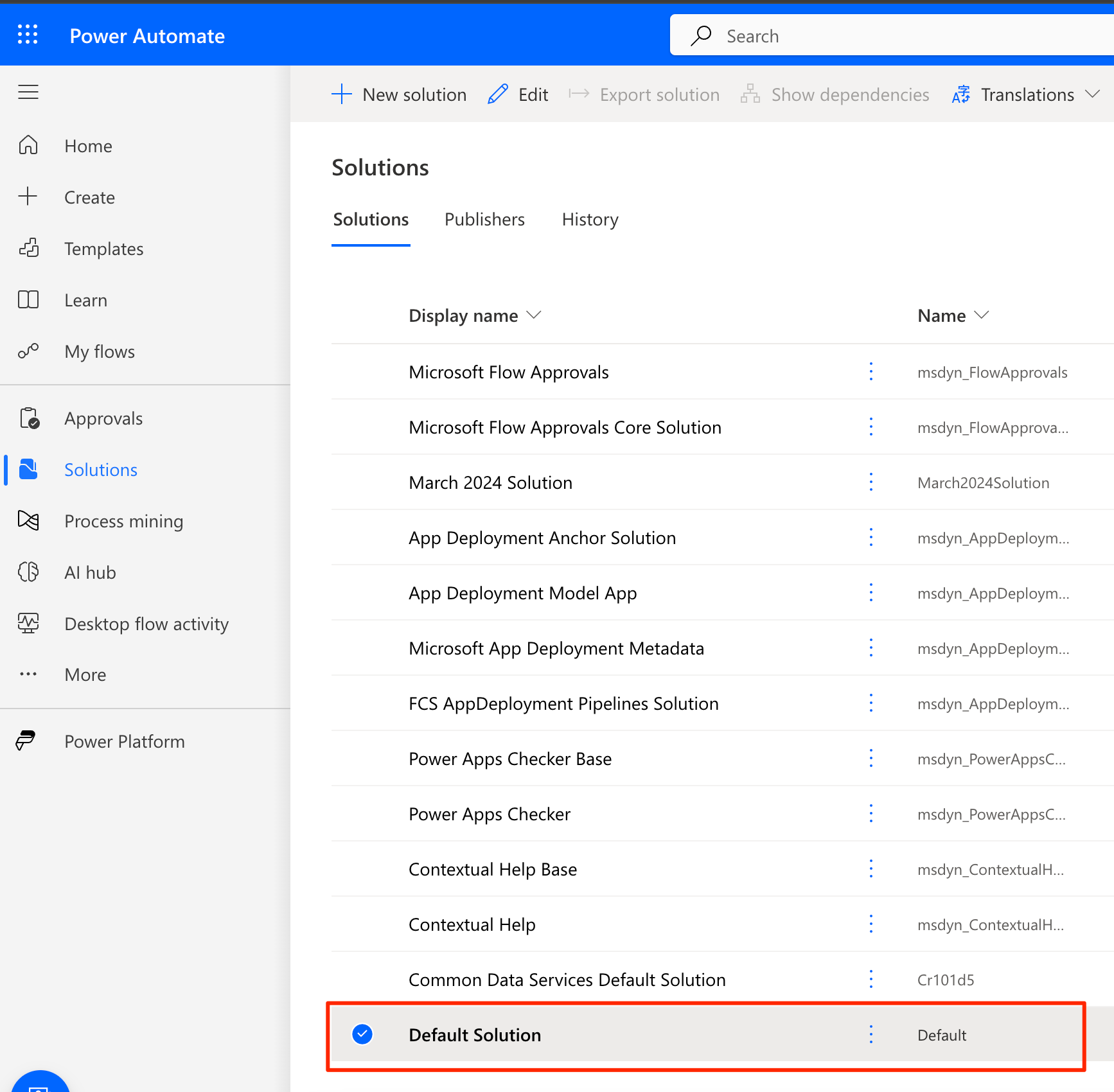

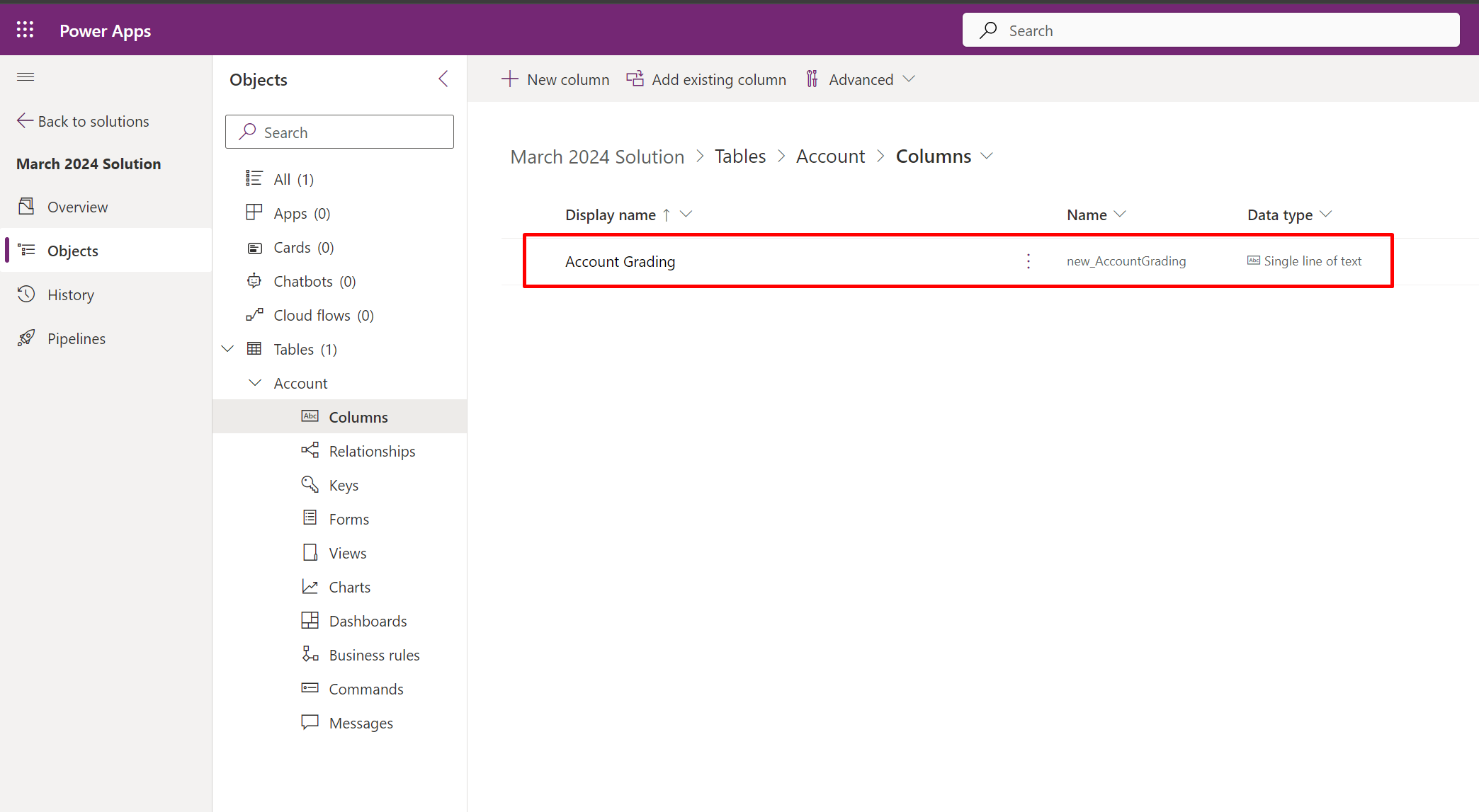

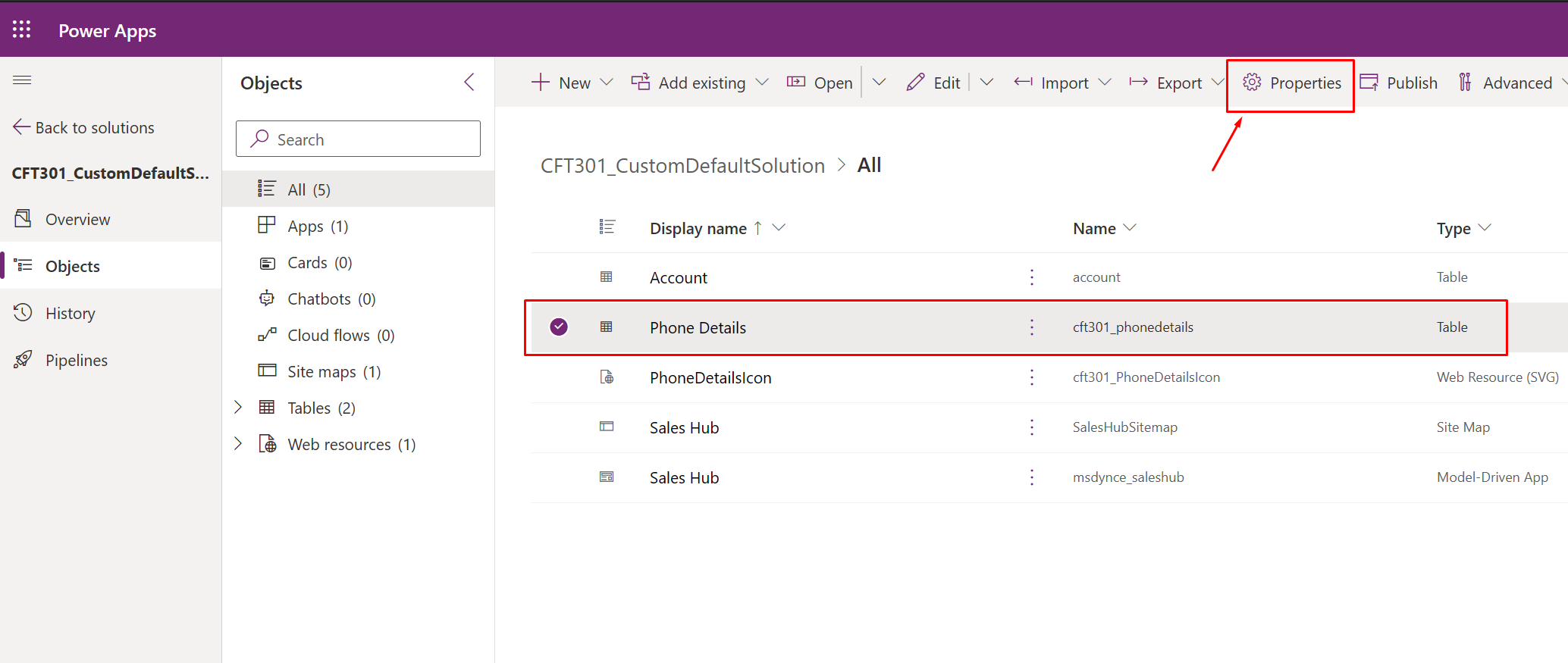

- In your solution, you have the table as well as the SVG web resource you just created, containing the uploaded image you want to set as the icon.
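If you prefer to create the SVG web resource programmatically instead of through the maker portal, the Dataverse Web API accepts a POST to the `webresourceset` entity set. The sketch below only builds the request body and sends nothing; it assumes `webresourcetype` 11 is the SVG (vector) option and that `content` must be base64-encoded. The helper `build_svg_webresource` and the name `new_/icons/myentity.svg` (with a `new_` publisher prefix) are hypothetical.

```python
import base64
import json

# Option value of webresourcetype for SVG (vector format) web resources.
SVG_WEBRESOURCE_TYPE = 11


def build_svg_webresource(name: str, display_name: str, svg_markup: str) -> dict:
    """Build the JSON body for POST /api/data/v9.2/webresourceset (hypothetical helper)."""
    # Web resource content is stored as a base64-encoded string.
    content = base64.b64encode(svg_markup.encode("utf-8")).decode("ascii")
    return {
        "name": name,
        "displayname": display_name,
        "webresourcetype": SVG_WEBRESOURCE_TYPE,
        "content": content,
    }


if __name__ == "__main__":
    svg = '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 16 16"><circle cx="8" cy="8" r="6"/></svg>'
    body = build_svg_webresource("new_/icons/myentity.svg", "My Entity Icon", svg)
    print(json.dumps(body, indent=2))
```

The `name` you choose here is what you would later reference as the table's icon, so a predictable naming scheme per table helps.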

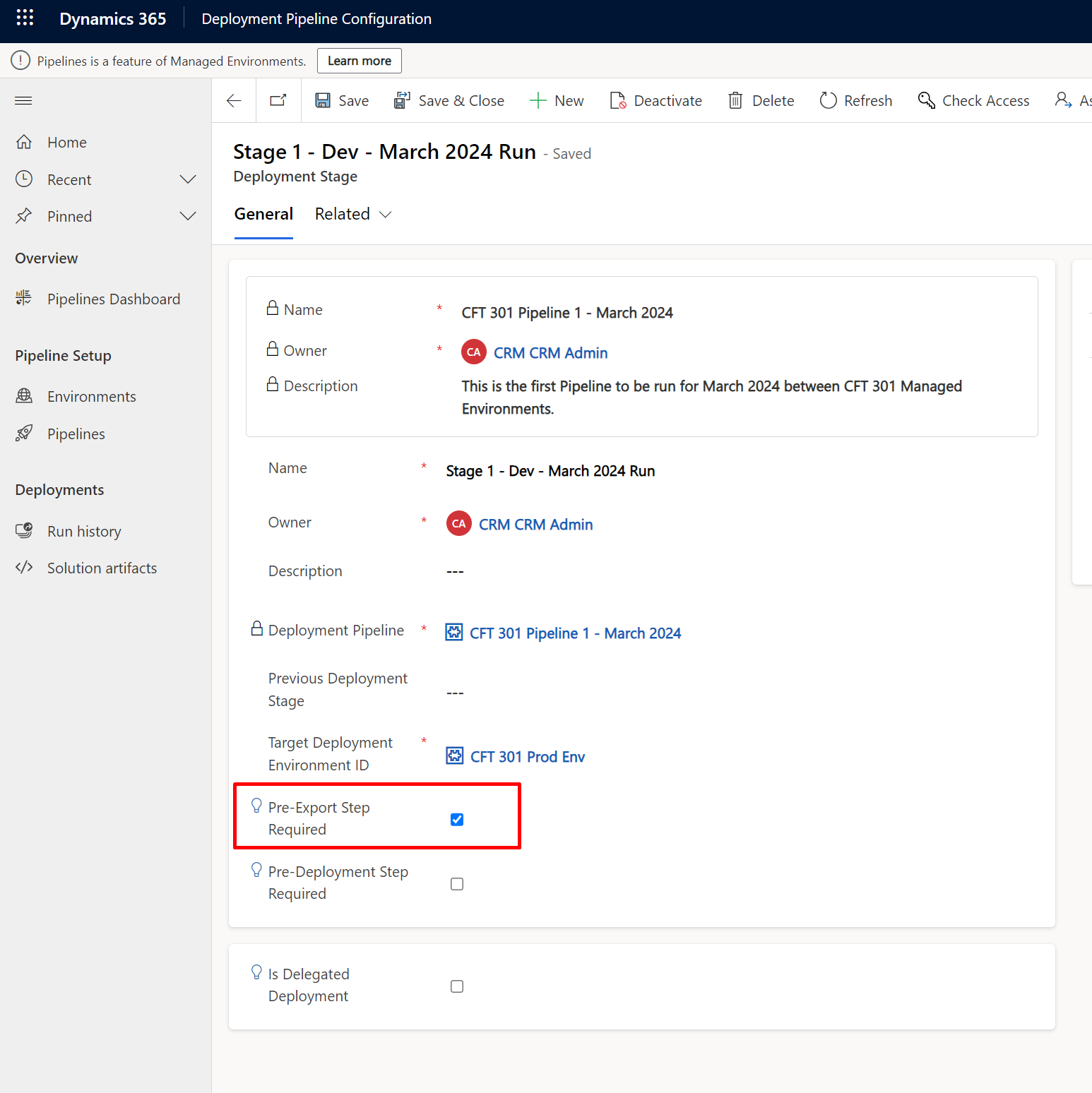

- Now, select the table you want to set the SVG icon for, and click Properties.

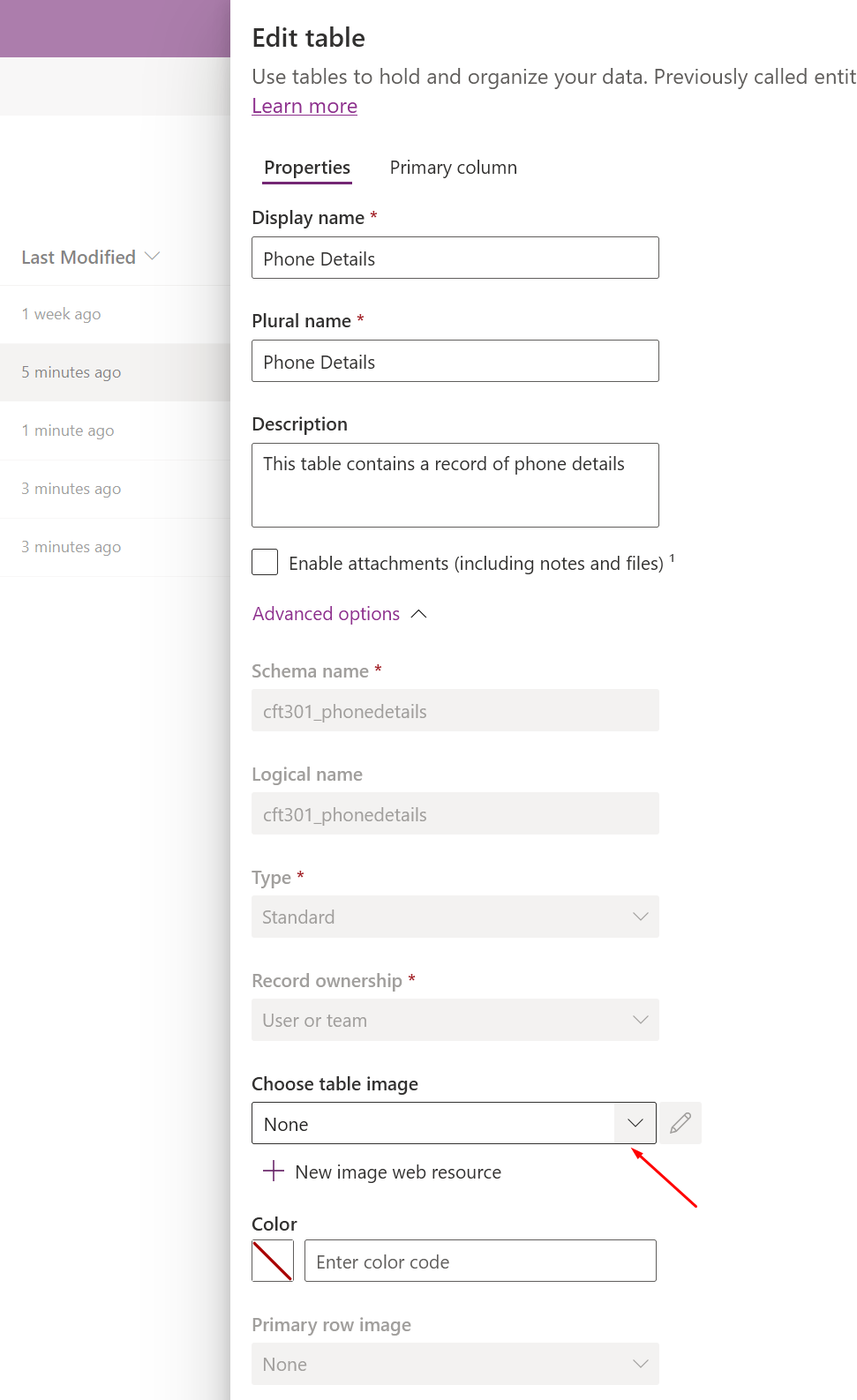

- In the right-hand pane, expand the Advanced area and look for the Choose table image field.

- Then, start typing the Display Name of the SVG web resource you want to use for this entity.

If you have no other changes to make, click Save.
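The Choose table image field appears to correspond to the table’s `IconVectorName` metadata property, which holds the name of the SVG web resource. A minimal sketch of the same change through the Web API, assuming you have already retrieved the table definition from `EntityDefinitions(LogicalName='new_project')` as a dict: it sets `IconVectorName` on a copy, which you would then send back with a PUT that includes the `MSCRM.MergeLabels` header. The helper `set_table_icon`, the table `new_project`, and the web resource name are hypothetical.

```python
import copy
import json

# Headers commonly sent when updating a table definition with PUT.
# MSCRM.MergeLabels merges the labels you send with existing ones
# instead of replacing them.
UPDATE_HEADERS = {
    "Content-Type": "application/json",
    "MSCRM.MergeLabels": "true",
}


def set_table_icon(entity_metadata: dict, svg_webresource_name: str) -> dict:
    """Return a copy of a retrieved table definition with its SVG icon set.

    IconVectorName holds the unique name of the SVG web resource.
    The input dict is left unmodified.
    """
    updated = copy.deepcopy(entity_metadata)
    updated["IconVectorName"] = svg_webresource_name
    return updated


if __name__ == "__main__":
    # Hypothetical, heavily trimmed table definition.
    retrieved = {"LogicalName": "new_project", "IconVectorName": None}
    body = set_table_icon(retrieved, "new_/icons/project.svg")
    print(json.dumps(body))
```

Copying rather than mutating keeps the originally retrieved definition available if the update needs to be retried or compared.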

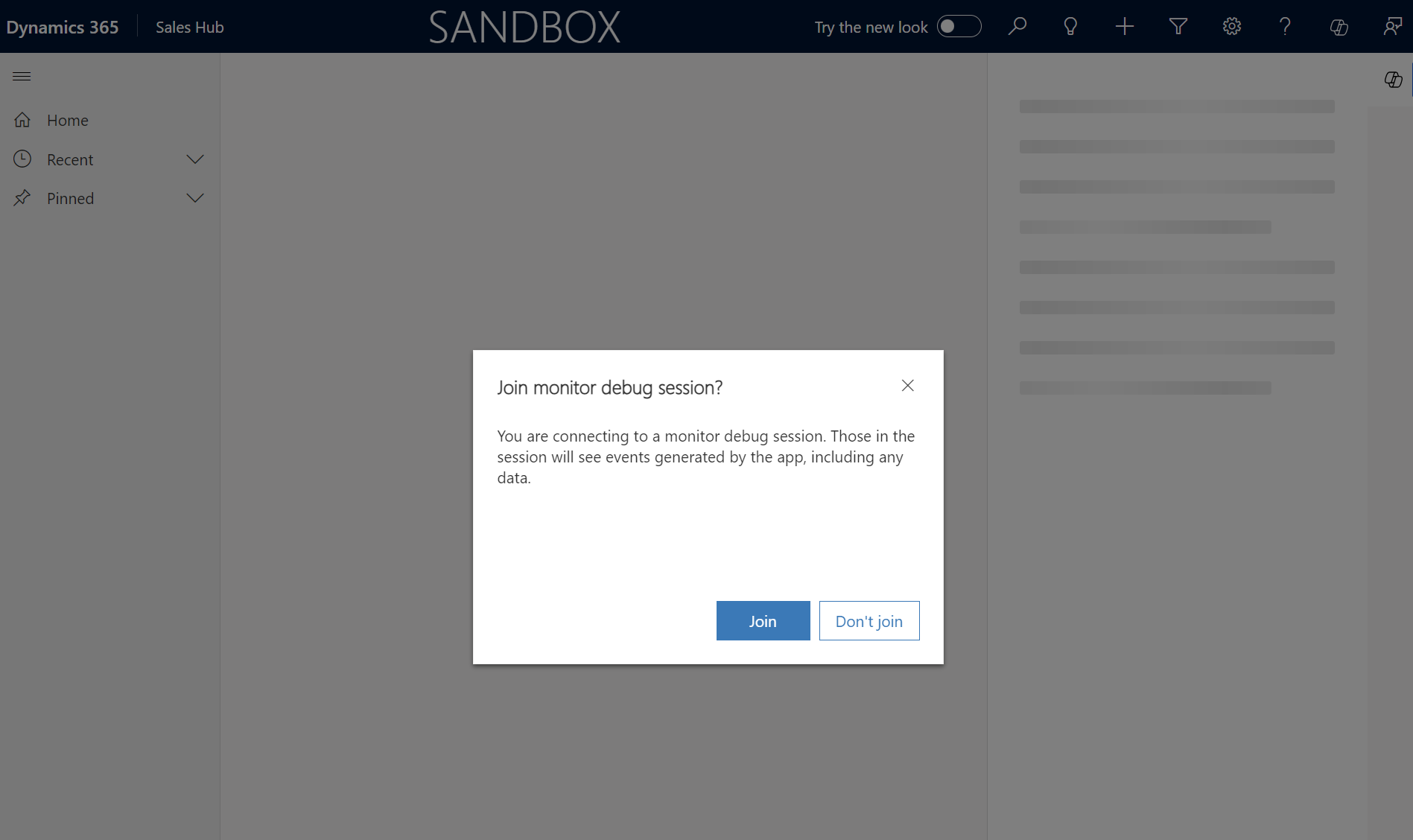

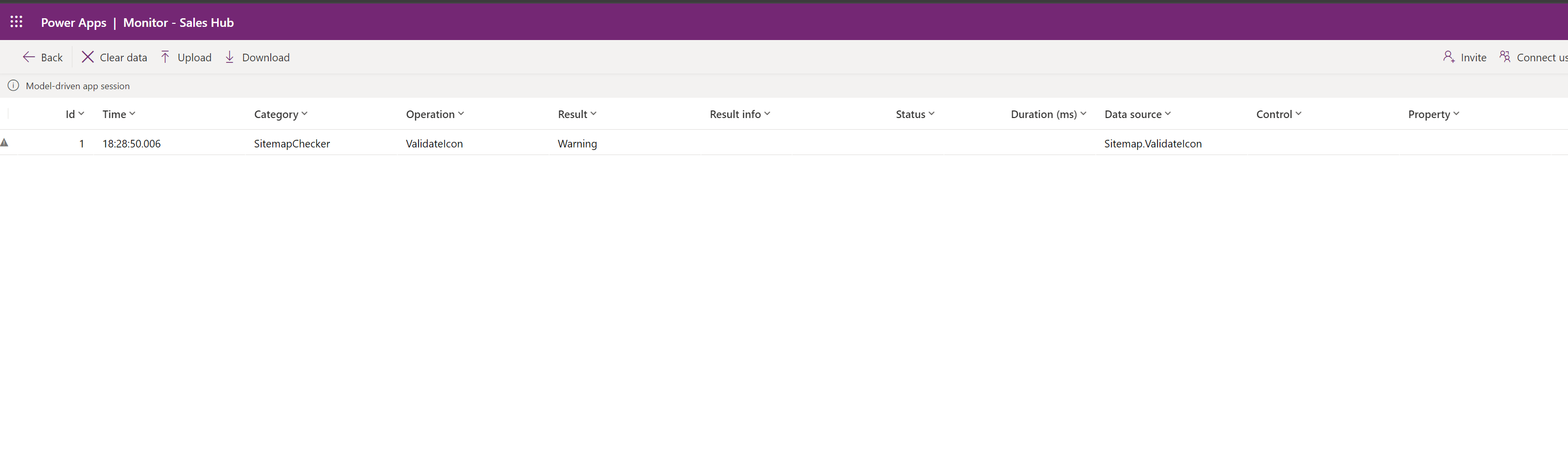

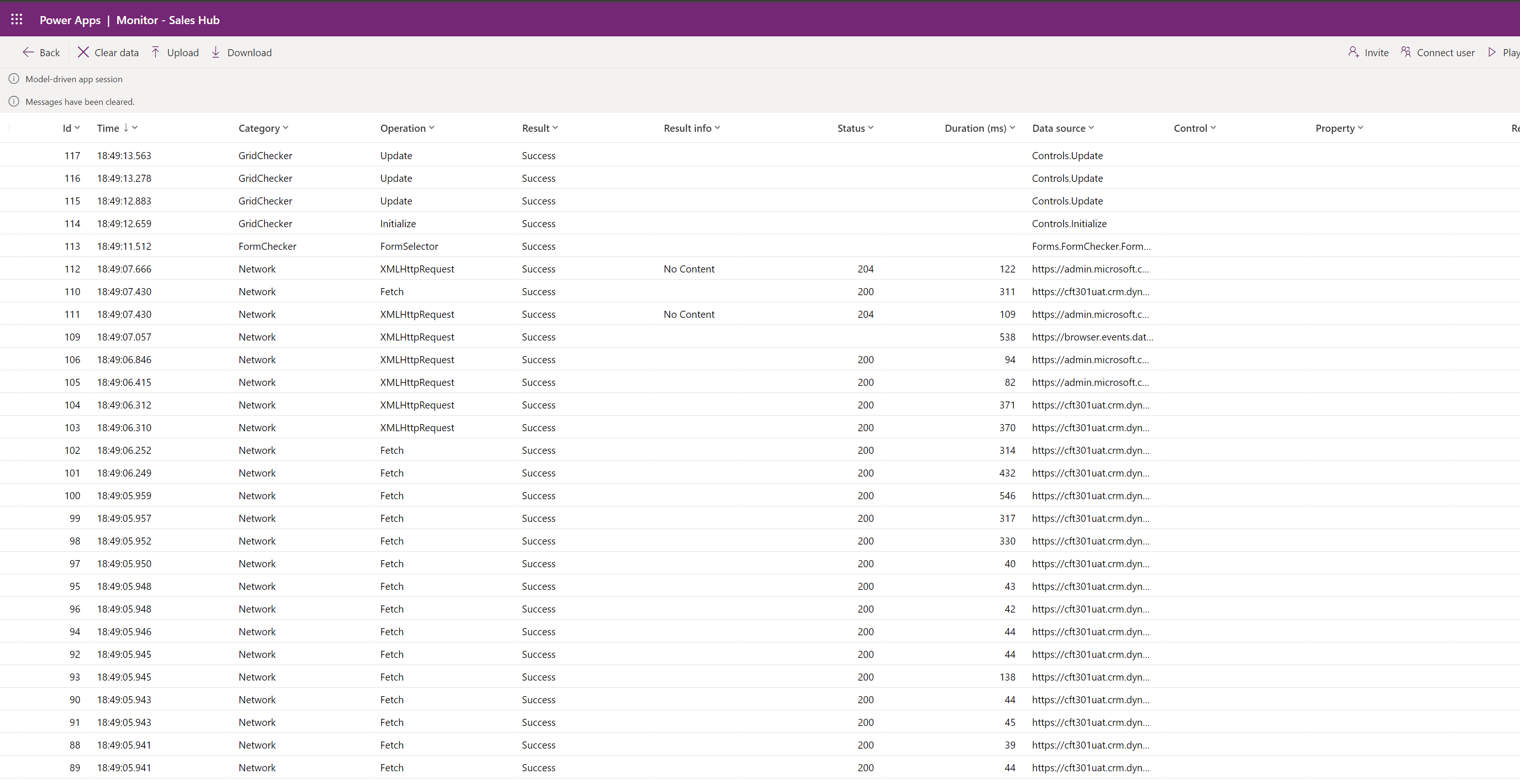

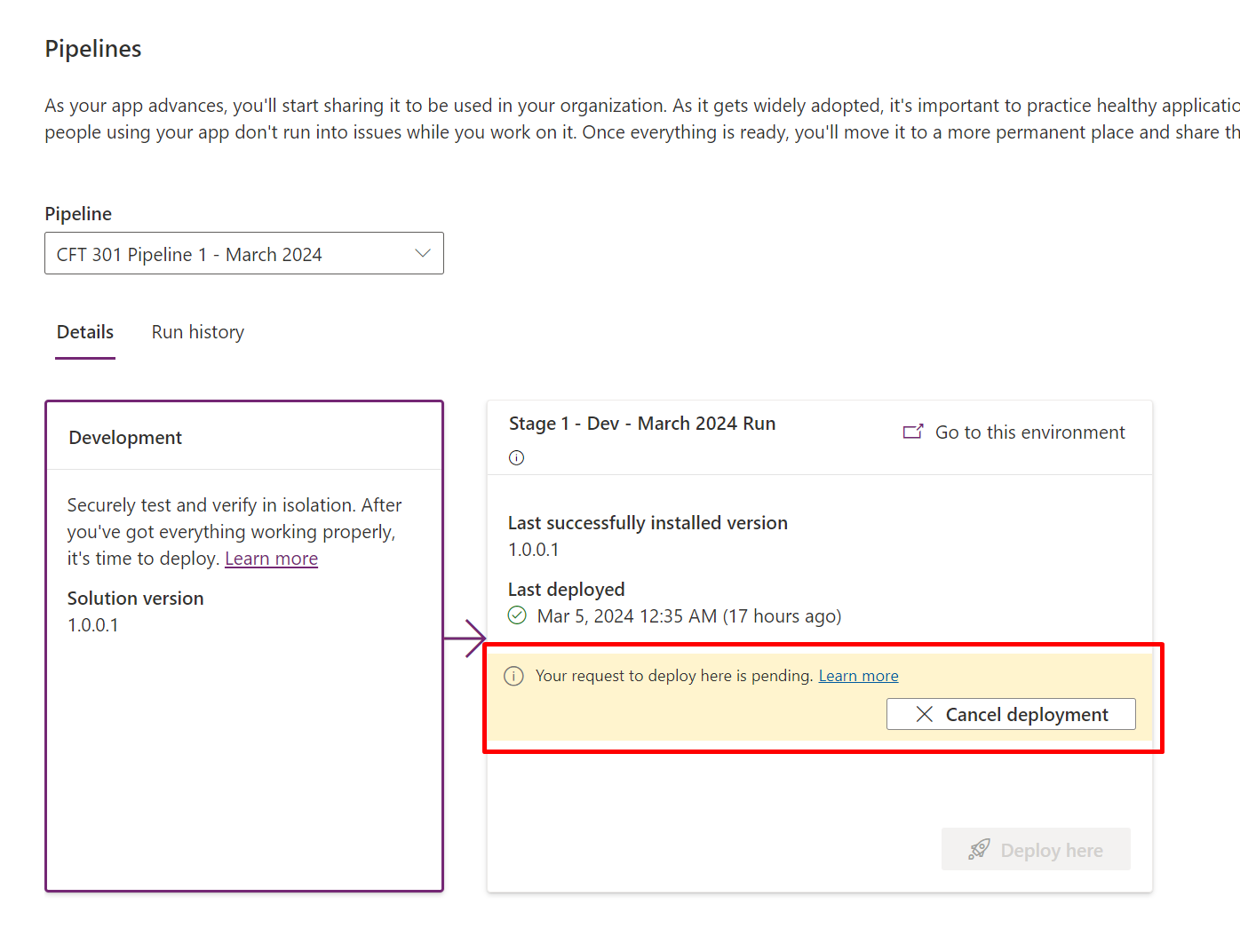

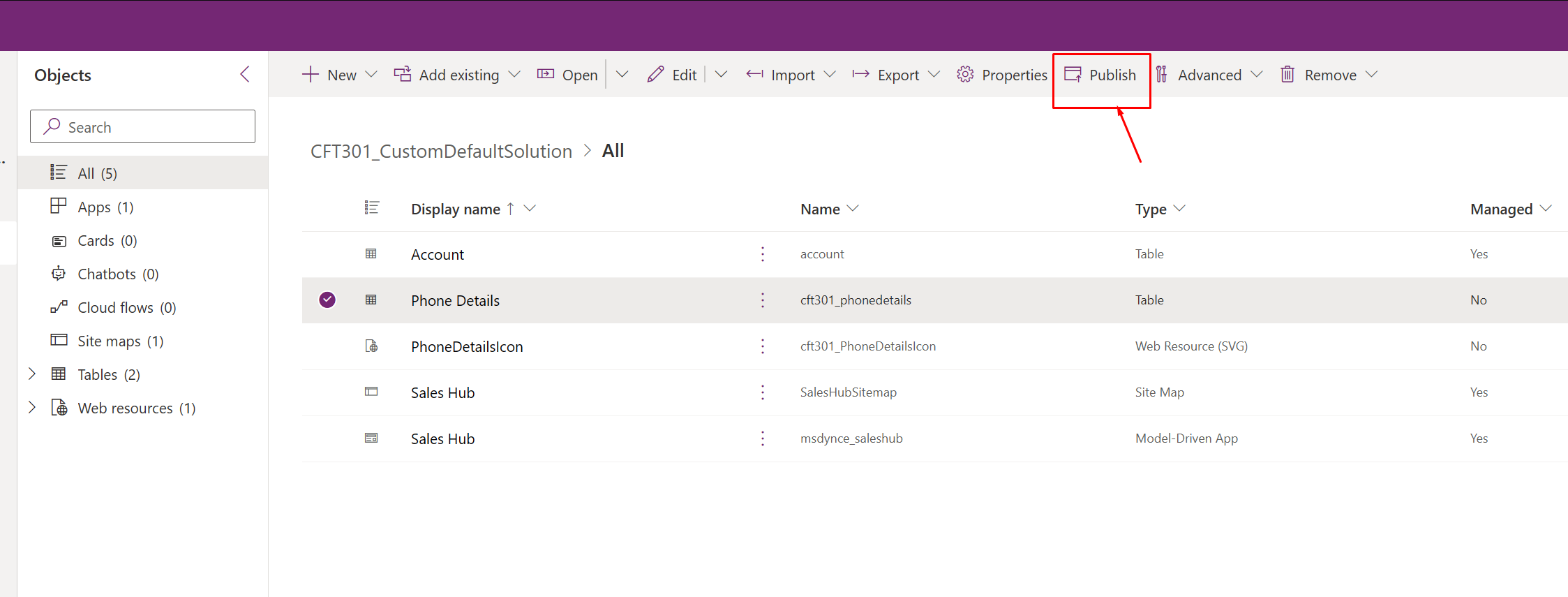

- Once saved, click Publish.
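Publishing can also be triggered with the Web API’s `PublishXml` action, which takes a `ParameterXml` string listing the components to publish. The sketch below builds that body for one or more tables only (web resources would need their own entries); the helper `build_publish_body` and the table name are hypothetical.

```python
from typing import Iterable
from xml.sax.saxutils import escape


def build_publish_body(table_logical_names: Iterable[str]) -> dict:
    """Build the JSON body for POST /api/data/v9.2/PublishXml for the given tables."""
    # Each table is listed by its logical name inside <entities>.
    entities = "".join(f"<entity>{escape(name)}</entity>" for name in table_logical_names)
    parameter_xml = f"<importexportxml><entities>{entities}</entities></importexportxml>"
    return {"ParameterXml": parameter_xml}


if __name__ == "__main__":
    print(build_publish_body(["new_project"]))
```

Publishing only the tables you changed is usually faster than publishing all customizations.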

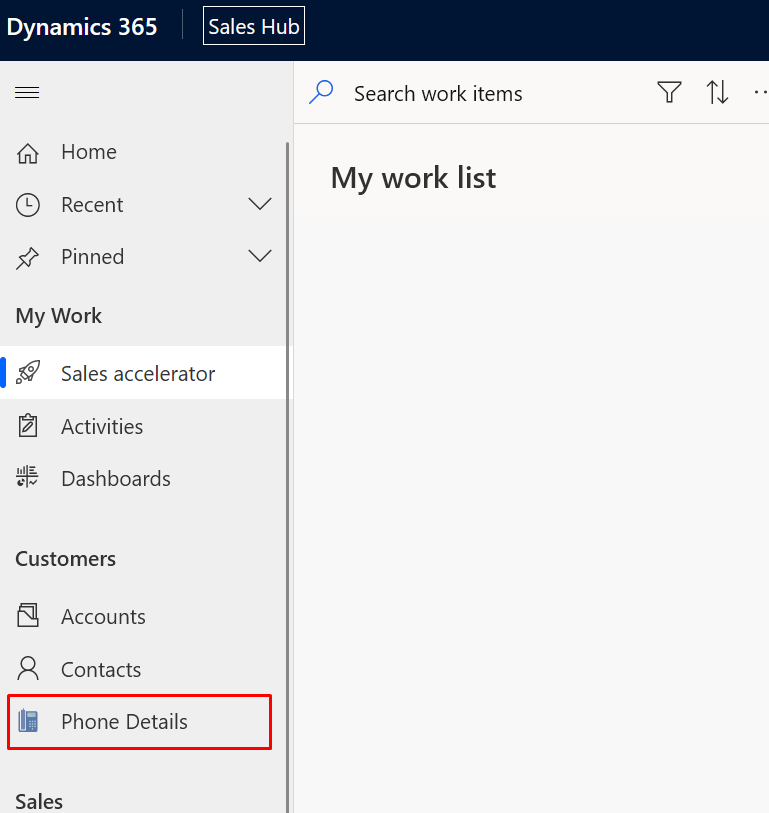

- Now, when you refresh the app whose sitemap lists the custom entity, you’ll see the updated icon.

Hope this was useful!

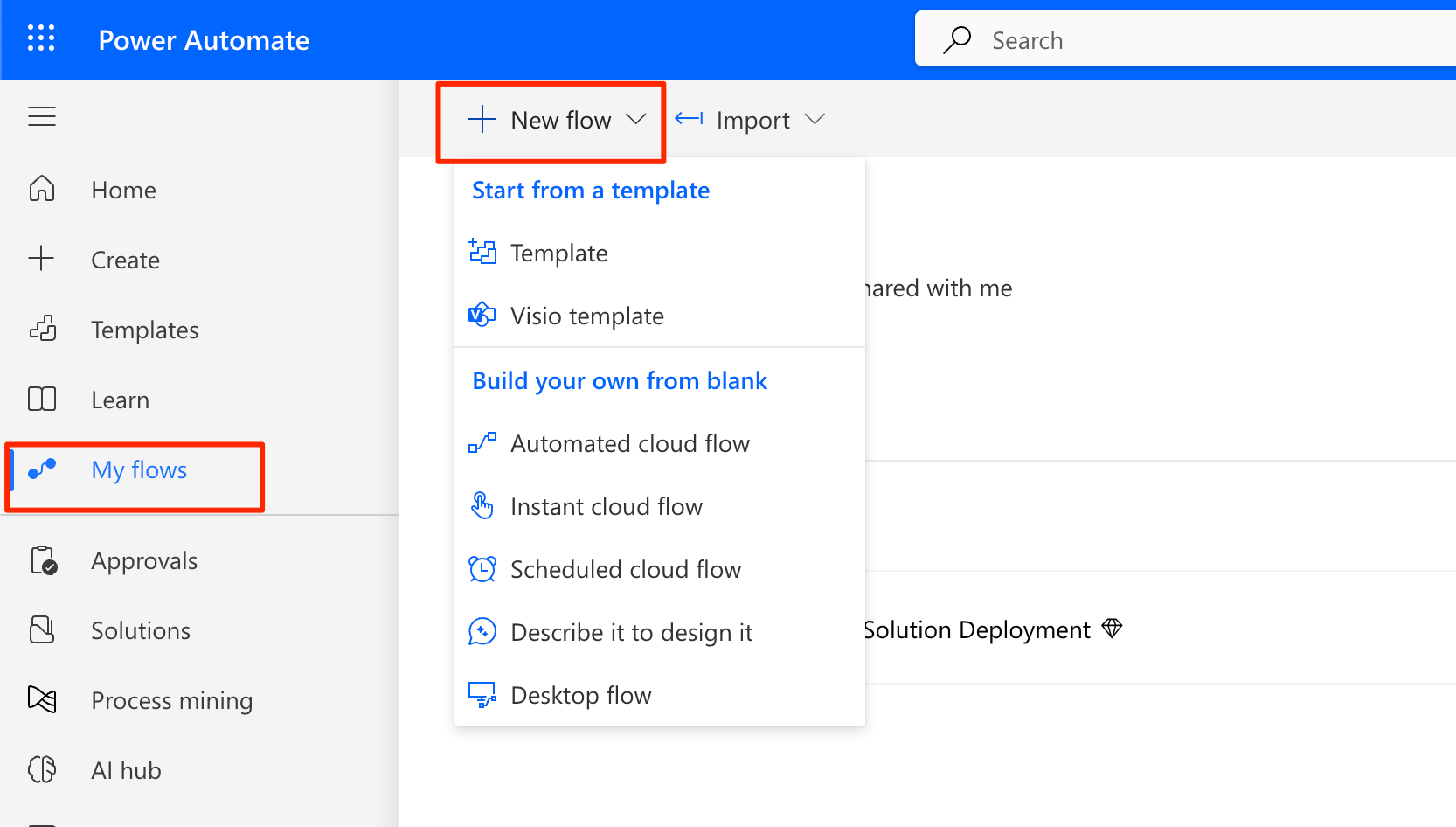


Thank you!